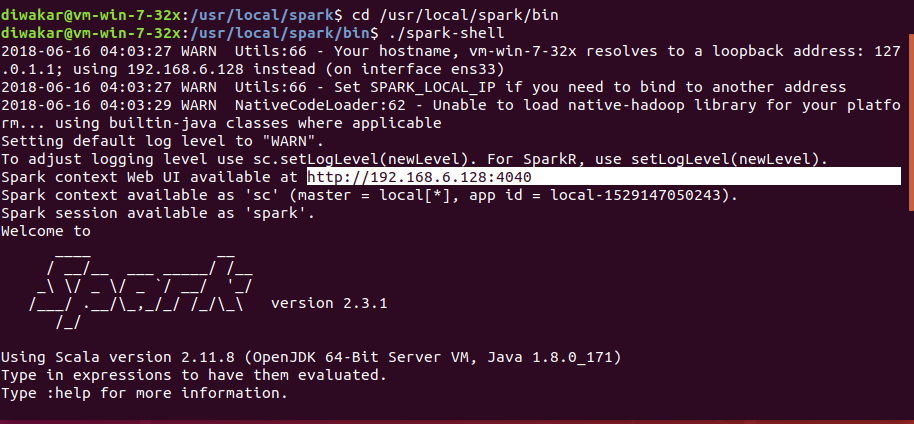

In my case, the files downloaded in the prerequisites section are in the Downloads folder. Change into it and extract the Spark archive:

- `cd Downloads`
- `sudo tar xzf spark-2.3.0-bin-hadoop2.7.tgz`

Then move the extracted files into the Spark directory: `sudo mv spark-2.3.0-bin-hadoop2.7/* /usr/local/spark`

Step 9: Set Environment Properties. Open the file with `sudo gedit $HOME/.bashrc` and add the properties below:

- `export SCALA_HOME=/usr/local/scala`
- `export PATH=$SPARK_HOME/bin:$JAVA_HOME/bin:$SCALA_HOME/bin:$PATH`

After saving the file, reload the environment: `source $HOME/.bashrc`

Step 10: Set Spark Environment Properties. Change to the configuration directory with `cd /usr/local/spark/conf`, copy the template with `sudo cp spark-env.sh.template spark-env.sh`, and add these properties to the file:

- `export SCALA_HOME=/usr/local/scala`
- `export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64`

Next, copy the corresponding template (`sudo cp …nf`) and add its properties to that file.

Step 12: Set Slaves (`localhost` in our case). Copy the template: `sudo cp slaves…`

Connect via SSH on every node except the node named Zookeeper, and add the dependencies needed to connect Spark and Cassandra.
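Steps 9, 10 and 12 can be made repeatable with two small helpers. This is a minimal sketch, not the author's method: the function names `add_spark_env` and `write_spark_conf` and the marker comments are hypothetical. `add_spark_env` also exports `SPARK_HOME` and `JAVA_HOME`, an assumption made because the `PATH` line refers to both variables; the values reuse `/usr/local/spark` from Step 4 and the Java path from Step 10. `write_spark_conf` assumes the `spark-env.sh.template` and `slaves.template` files that ship in the `conf` directory of Spark 2.x.

```bash
# Hypothetical helpers for Steps 9, 10 and 12. Both are idempotent:
# running them a second time does not duplicate any lines.

# Step 9: append the environment block to a startup file (default ~/.bashrc).
add_spark_env() (
  target="${1:-$HOME/.bashrc}"
  marker='# >>> spark environment >>>'
  if [ -f "$target" ] && grep -qF "$marker" "$target"; then
    exit 0   # block already present, nothing to do
  fi
  # Quoted 'EOF': the $VARS are written literally and expanded when the file is sourced.
  # SPARK_HOME and JAVA_HOME are assumptions (the PATH line needs them).
  cat >> "$target" <<'EOF'
# >>> spark environment >>>
export SPARK_HOME=/usr/local/spark
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
export SCALA_HOME=/usr/local/scala
export PATH=$SPARK_HOME/bin:$JAVA_HOME/bin:$SCALA_HOME/bin:$PATH
# <<< spark environment <<<
EOF
)

# Steps 10 and 12: prepare spark-env.sh and the worker list in a conf directory.
write_spark_conf() (
  conf_dir="${1:-/usr/local/spark/conf}"
  cd "$conf_dir" || exit 1
  # Start from the shipped templates, but never overwrite an existing file.
  for f in spark-env.sh slaves; do
    if [ ! -e "$f" ] && [ -e "$f.template" ]; then
      cp "$f.template" "$f" || exit 1
    fi
  done
  # Step 10: Spark's launch scripts source spark-env.sh; add the properties once.
  if ! grep -qxF 'export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64' spark-env.sh 2>/dev/null; then
    cat >> spark-env.sh <<'EOF'
export SCALA_HOME=/usr/local/scala
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
EOF
  fi
  # Step 12: on a single machine, localhost is the only worker host.
  grep -qxF localhost slaves 2>/dev/null || echo localhost >> slaves
)
```

Run `add_spark_env` as the user who will run Spark, then reload with `source $HOME/.bashrc`. Run `write_spark_conf` as a user that can write to `/usr/local/spark/conf`; it only covers `spark-env.sh` and `slaves`.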

If you are virtualizing Ubuntu with VirtualBox, use the following settings to be able to reach your server.

Next up, we create a group and a user under which Spark will run.

Step 3: Create a User to Run Spark and Set Permissions. Create the group with `sudo addgroup sparkgroup` and the user with `sudo adduser --ingroup sparkgroup sparkuser`. To configure permissions for the user, open the sudoers file with `sudo gedit /etc/sudoers` and add the line `sparkuser ALL=(ALL) ALL`. Editing the file with `sudo visudo` instead is safer, because visudo checks the syntax before saving.

Step 4: Create the Spark Directory and Set Permissions. Create it with `sudo mkdir /usr/local/spark`, then change the folder ownership to sparkuser: `sudo chown -R sparkuser /usr/local/spark`

Step 5: Create the Scala Directory and Set Permissions. Create it with `sudo mkdir /usr/local/scala`, then change ownership to sparkuser: `sudo chown -R sparkuser /usr/local/scala`

Step 6: Create the Spark Temporary Directory. Create it with `sudo mkdir -p /app/spark/tmp` (`-p` also creates `/app` and `/app/spark`), then change ownership to sparkuser: `sudo chown -R sparkuser /app/spark/tmp`

Switch to the directory where you saved the files downloaded in the prerequisites section.
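Steps 3 to 6 can be collected into one function and reviewed before anything is changed. In this sketch the name `setup_spark_user_and_dirs` and the `DRY_RUN` switch are hypothetical: with `DRY_RUN=1` each command is printed instead of executed. The sudoers change is deliberately left as a manual step, and `mkdir -p` is used so that Step 6 creates `/app/spark/tmp` together with its parent directories.

```bash
# Hypothetical sketch of Steps 3-6. With DRY_RUN=1 the commands are only
# printed, which lets you review them before running them for real.
setup_spark_user_and_dirs() (
  run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

  # Step 3: dedicated group and user (adduser prompts for a password).
  run sudo addgroup sparkgroup || exit 1
  run sudo adduser --ingroup sparkgroup sparkuser || exit 1

  # Steps 4-6: install and scratch directories, all owned by sparkuser.
  for dir in /usr/local/spark /usr/local/scala /app/spark/tmp; do
    run sudo mkdir -p "$dir" || exit 1
    run sudo chown -R sparkuser "$dir" || exit 1
  done
)
```

Preview with `DRY_RUN=1 setup_spark_user_and_dirs`, then run `setup_spark_user_and_dirs` to apply it; `adduser` still prompts interactively for the new user's details.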

Step 2: Install the SSH server: `sudo apt-get install openssh-server`
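Step 2 can be run and checked in one go. The sketch below uses a hypothetical function name and the same kind of `DRY_RUN` preview switch as an assumption of this example; it installs the package and then asks systemd whether the service is up. On Ubuntu the OpenSSH server's systemd unit is called `ssh`.

```bash
# Sketch of Step 2: install the OpenSSH server, then ask systemd whether the
# "ssh" unit (the OpenSSH server's unit name on Ubuntu) is active.
# With DRY_RUN=1 the commands are printed instead of executed.
install_ssh_server() (
  run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }
  run sudo apt-get install -y openssh-server || exit 1
  run systemctl is-active ssh   # prints "active" when the server is running
)
```

If `systemctl is-active ssh` reports anything other than `active`, the server will not accept the SSH connections you plan to make into the machine.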

To get started, you need a clean install of Ubuntu 16.04 LTS and a copy of Spark. We will be using the latest version at the time of writing, Spark 2.3 pre-built for Hadoop 2.7 and later, which you can get from the Apache Spark download page. Go ahead and download the spark-2.3.0-bin-hadoop2.7.tgz file. You also want to download the Scala binaries for Unix; I am using Scala 2.12.6, which downloads the file scala-2.12.6.tgz.

Spark Installation Steps

Open up your Ubuntu terminal and follow the steps below. Spark 2.3 requires Java 8, so that is where we will begin. We will also install an SSH server so that we can SSH into our Ubuntu machine.

Step 1: Install Java 8. Start by updating the package index: `sudo apt-get update`
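Because Spark 2.3 needs Java 8 specifically, it is worth checking which `java` ends up on your PATH. This sketch assumes Java comes from the OpenJDK 8 package in the Ubuntu 16.04 repositories (`sudo apt-get install openjdk-8-jdk`), which matches the `JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64` path used in Step 10; the helper name `java_major_version` is hypothetical.

```bash
# Hypothetical helper: print the major Java version, either from text passed
# as $1 or from the first line of `java -version` (which writes to stderr).
# Java 8 reports "1.8.0_xxx"; Java 9 and later report "9", "10.0.2", "11.0.1", ...
java_major_version() (
  line="${1:-$(java -version 2>&1 | head -n 1)}"
  # Keep only the quoted version string, e.g. 1.8.0_171.
  ver="$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')"
  [ -n "$ver" ] || exit 1   # no version string found
  case "$ver" in
    1.*) printf '%s\n' "$ver" | cut -d. -f2 ;;   # legacy scheme: 1.8 means 8
    *)   printf '%s\n' "$ver" | cut -d. -f1 ;;   # newer scheme: 11.0.2 means 11
  esac
)
```

After installing Java, `[ "$(java_major_version)" = 8 ]` should succeed; if it does not, another Java version is first on your PATH.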

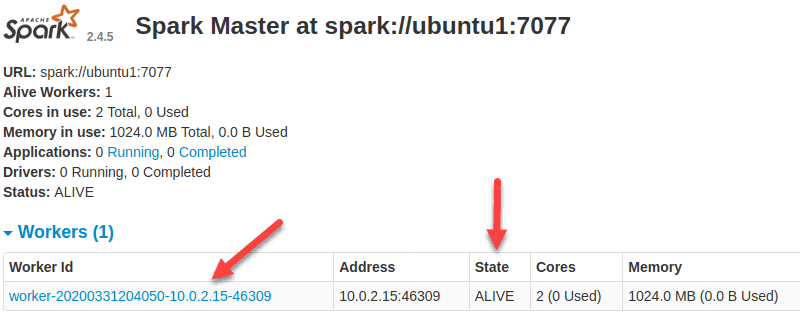

Spark can be deployed (as of this writing) in four different ways. The first, which we will use, is Standalone mode. This is the easiest way to get started with Spark, because it uses the cluster manager that comes prepackaged with Spark. The other methods to deploy a Spark cluster are Apache Mesos, Hadoop YARN, and Kubernetes (experimental in Spark 2.3).
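Each of the four cluster managers is selected through the `--master` option of `spark-submit` and `spark-shell`. The hypothetical helper below prints the URL form for each one. Ports 7077 (standalone master) and 5050 (Mesos master) are the usual defaults. Spark requires the Kubernetes API server port to be given explicitly, so the `443` fallback here is an assumption. For YARN, the cluster location comes from the Hadoop configuration (`HADOOP_CONF_DIR` or `YARN_CONF_DIR`) rather than from the URL.

```bash
# Hypothetical helper: print the --master value that spark-submit and
# spark-shell expect for each of the four cluster managers.
# Usage: spark_master_url MODE [HOST] [PORT]
spark_master_url() (
  mode="$1"
  host="${2:-localhost}"
  case "$mode" in
    standalone) echo "spark://$host:${3:-7077}" ;;        # Spark's bundled manager
    mesos)      echo "mesos://$host:${3:-5050}" ;;        # Mesos master
    yarn)       echo "yarn" ;;                            # location from HADOOP_CONF_DIR / YARN_CONF_DIR
    kubernetes) echo "k8s://https://$host:${3:-443}" ;;   # API server; 443 is an assumed default
    *) echo "unknown mode: $mode" >&2; exit 1 ;;
  esac
)
```

For the standalone setup in this guide, `$SPARK_HOME/sbin/start-all.sh` starts a master plus the workers listed in `conf/slaves` (reaching them over SSH). You can then connect with `spark-shell --master "$(spark_master_url standalone myhost)"`, where `myhost` is a placeholder for the host shown in the `spark://` URL on the master's web UI (port 8080 by default).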

Did I mention it is extremely fast? It is, and there is a lot of hype around it. On the other hand, getting started with it is not entirely straightforward, which is the reason for this article. I will show you how to install Spark in standalone mode on Ubuntu 16.04 LTS to prepare your Spark development environment, so that you can begin experimenting with it.

Hackdeploy: I enjoy building digital products and programming.

Apache Spark Installation Guide on Ubuntu 16.04 LTS

Apache Spark is an open-source, general-purpose cluster computing engine designed to be lightning fast. It was originally developed at the University of California, Berkeley, and its codebase was later donated to the Apache Software Foundation; as of today, it is the largest open-source project in data processing.
